This article relates to Day Zero

Science fiction tends to reflect the deeper moral issues and fears confronting a society at the time it is written. Storytelling offers a safe way to express anxieties about the state of the world. It gives authors and readers an opportunity to explore the murkiness of uncertainty in a non-threatening way. Reading and discussing sci-fi is a more effective outlet than, say, randomly telling neighbors you are worried their Amazon Alexa might one day turn on them. Books like Day Zero are symptomatic of contemporary angst about artificial intelligence (AI).

Today, there is increasing concern that AI threatens the future of the human race. In his later years, Stephen Hawking became a vocal critic of AI, even as he used the technology himself. "The development of full artificial intelligence could spell the end of the human race," Hawking told the BBC in 2014.

Humans are limited by biological evolution, which is dreadfully slow, whereas machine learning advances at near-exponential speed, Hawking and others have argued. Soon our species won't be able to compete: AI will eventually, perhaps sooner than we imagine, surpass us. It's not just world-renowned physicists who worry about AI; corporate leaders also recognize the potential dangers of this technology.

Elon Musk is an AI skeptic. The businessman behind Tesla, SpaceX and Starlink believes we are less than five years away from AI surpassing humans in certain cognitive functions. Whereas it has taken us millions of years to evolve to our current level of intelligence, he believes it will not be terribly long before humans are to AI roughly what cats are to humans today.

Musk was an early investor in DeepMind, one of the leading AI companies. He claims he invested not for profit but to keep abreast of the latest technological developments. He is also an active proponent of government oversight of AI, though he doesn't expect it to happen. Realizing that corporate interests and techno-libertarians will likely oppose government intervention, Musk co-founded a nonprofit organization, OpenAI, whose goal is to democratize AI research and facilitate scientific and government oversight of developments.

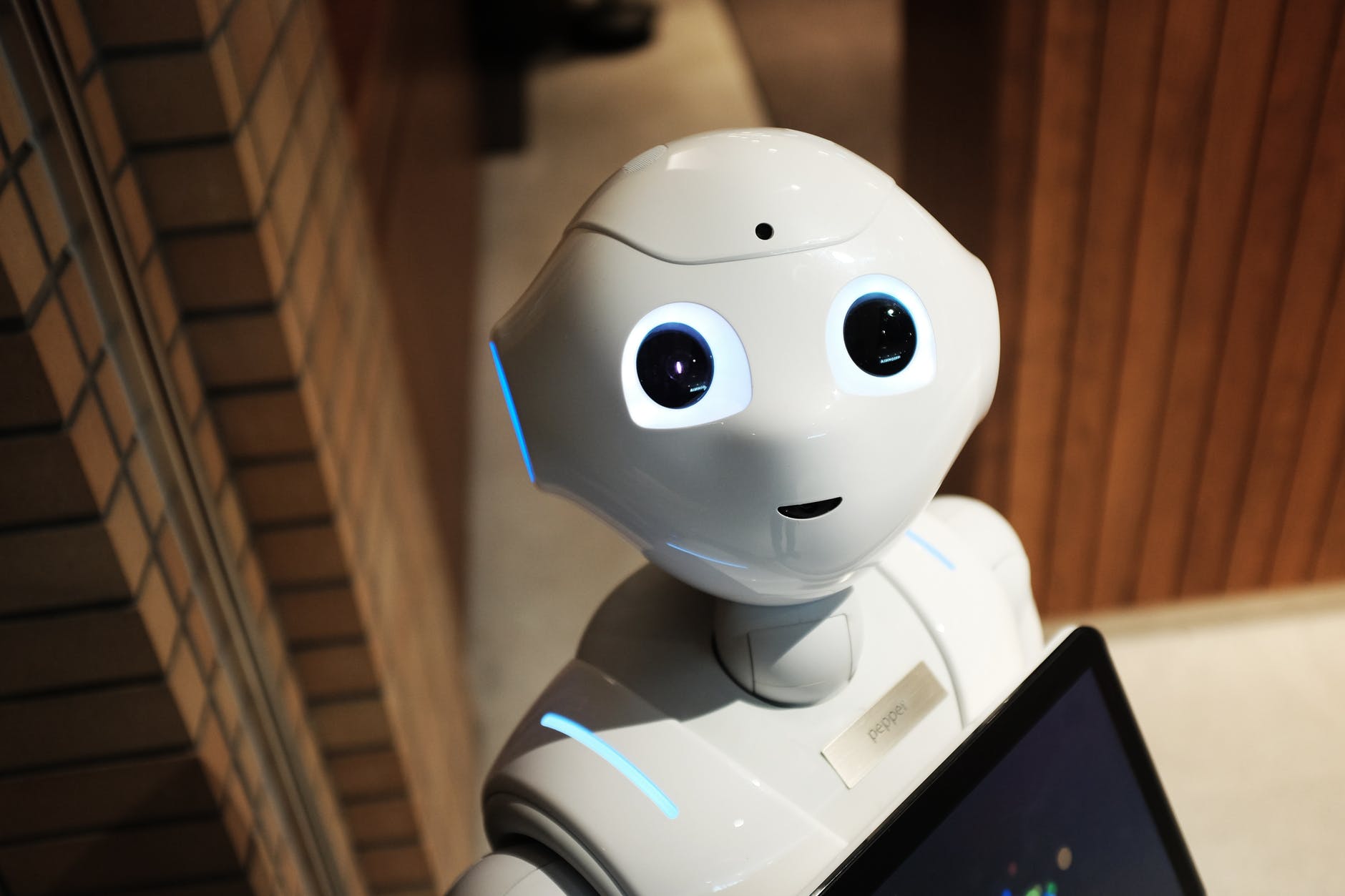

Are these skeptics overreacting? Many argue that AI is simply a natural outgrowth of human ingenuity: a new tool in our arsenal to navigate and change the world around us. Some believe job automation may not be a bad thing; AI-powered robots may even usher in an era of universal basic income. AI development already benefits almost all of us living in the Global North, often in ways we take for granted.

Steve Wozniak, Elon Musk and dozens of other prominent corporate and scientific figures have signed an open letter on the website of the Future of Life Institute opposing the development of autonomous AI weapons. Letters of protest are great, but it's probably too late. It is rare for any technological development to be slowed when it can be used for military purposes. Much of our modern technology, including the internet, GPS and nuclear power, grew out of military research.

News reports today still highlight the proliferation of nuclear arms and Russia's development of hypersonic nuclear weapons. It sells; people know to be scared of nuclear weapons. It's a sideshow, though. The main event in international geopolitics right now is the AI race between the People's Republic of China and the United States. China is now the world leader in AI development, having leapfrogged the US in total published research and AI patents.

Undoubtedly, both superpowers are using the AI race to develop weapons. A US Department of Defense program has already produced an AI algorithm that beat a top-ranked US fighter pilot in dogfight simulations, winning all five of their matchups. Should the US halt development of weaponized AI? What are the implications of stopping such research if other nations, or corporations, choose to pursue it?

And what of the existential fear raised in novels such as Day Zero: will AI eventually displace humanity?

At this point, it seems more likely that we will accidentally use AI to destroy ourselves. Perhaps the real threat is not AI and robots at all, but the fact that humans continue to develop technologies to gain advantage in violent conflict rather than to better society. Regardless of what happens, one thing is certain: anxiety about AI's role in society is going to result in some wild fiction.

This "Beyond the Book" article relates to Day Zero. It originally ran in May 2021 and has been updated for the March 2022 paperback edition.