
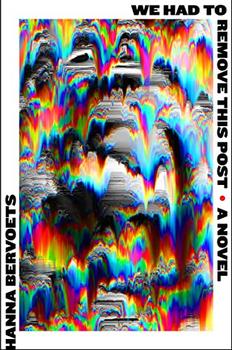

This article relates to We Had to Remove This Post

We Had to Remove This Post by Hanna Bervoets centers on a group of content moderators for a large social media site, who are technically contract workers employed by a smaller, third-party company. Their story and company are fictionalized, but Bervoets draws heavily on material about a 2018 lawsuit by content moderators against Facebook over their working conditions.


The lawsuit was first filed by Selena Scola, who worked as a Facebook content moderator for a contractor company from June 2017 to March 2018. Content moderators review posts that have been flagged for violating the platform's policies (according to Vice, such content includes "hate speech, graphic violence and self harm images and video, nudity and sexual content, bullying") and decide whether to remove them or leave them up. At the time of Scola's suit, Vice reported that Facebook had about 7,500 content moderators worldwide; a year later, that number had grown to 15,000.

As early as 2014, Wired was reporting on content moderation at Facebook and at other companies such as YouTube, on the psychological toll of the work, and on the ways that toll wasn't being taken seriously. "There's the thought that it's just the same as bereavement, or bullying at work, and… people can deal with it," one specialist said. But some experiences are neither normal nor possible to deal with alone. A contract worker in the Philippines reported that constant exposure to gruesome videos made her and her coworkers paranoid, suspecting "the worst of people they meet in real life, wondering what secrets their hard drives might hold"; some were even afraid to leave their children alone with babysitters.

In her suit, Scola alleged, according to The Verge, that she "developed PTSD after being placed in a role that required her to regularly view photos and images of rape, murder and suicide." Several other former Facebook moderators joined the suit, alleging that Facebook had failed to provide them with a safe workplace.

In 2020, Facebook agreed to pay $52 million in a settlement with former and current content moderators as compensation for harm to their mental health, although the company did not admit to wrongdoing. Each moderator received a minimum of $1,000 and was eligible for additional compensation if diagnosed with a mental health disorder such as PTSD. The settlement covered more than 11,000 moderators, and lawyers believed that as many as half might qualify for additional pay. More cases seem likely to follow. On May 10, 2022, a former Facebook content moderator in Kenya filed a new lawsuit against Facebook's parent company, Meta, alleging offenses including dangerous working conditions and a lack of the protections afforded to workers in some other countries, such as Ireland.

Facebook login screen on mobile browser. Photo by Solen Feyissa, via Unsplash

Filed under Society and Politics

This "Beyond the Book" article relates to We Had to Remove This Post. It originally ran in June 2022 and has been updated for the May 2023 paperback edition.




Your guide to exceptional books

BookBrowse seeks out and recommends the best in contemporary fiction and nonfiction—books that not only engage and entertain but also deepen our understanding of ourselves and the world around us.